Document Classification by Combining Convolution and Recurrent Layers

Paper - arXiv

Hypothesis

- CNNs need to be very deep to capture long-term dependencies. Using an RNN alongside a CNN, one can capture long-range dependencies with significantly fewer parameters.
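
To make the depth argument concrete, here is a rough sketch of how the receptive field of a stack of 1D convolutions grows with depth. The kernel width, pooling size, and the 101-character span are illustrative assumptions, not values taken from the paper.

```python
def receptive_field(num_layers: int, kernel_size: int = 3, pool_size: int = 1) -> int:
    """Receptive field, in input positions, of a stack of 1D conv layers.

    Assumes each layer is a stride-1 convolution of width `kernel_size`,
    optionally followed by non-overlapping max pooling of width `pool_size`.
    """
    field, jump = 1, 1  # jump: input positions between adjacent units
    for _ in range(num_layers):
        field += (kernel_size - 1) * jump  # convolution widens the field
        field += (pool_size - 1) * jump    # pooling window widens it further
        jump *= pool_size                  # pooling stride spreads units apart
    return field


def layers_needed(span: int, kernel_size: int = 3, pool_size: int = 1) -> int:
    """Smallest depth whose receptive field covers `span` input positions."""
    layers = 0
    while receptive_field(layers, kernel_size, pool_size) < span:
        layers += 1
    return layers


# Hypothetical dependency spanning 101 characters.
print(layers_needed(101, kernel_size=3, pool_size=1))
print(layers_needed(101, kernel_size=3, pool_size=2))
```

Under these assumptions, the depth needed grows with the span of the dependency (linearly without pooling, more slowly with it), whereas a recurrent layer passes its state across every time step, so its reach does not depend on stacking more layers.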

Interesting methods

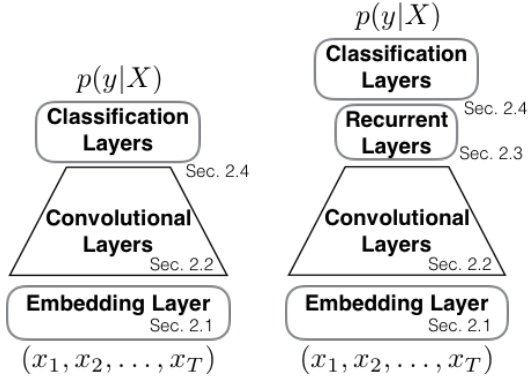

- They add a recurrent layer on top of the convolutional layers. Their model is character-level.

Architecture:

- Details

- Motivation: a CNN can learn to extract features that are invariant to local translation. By stacking multiple convolutional layers, the network can extract progressively higher-level, more abstract, (locally) translation-invariant features from the input sequence.

- The recurrent layer is able to capture long-term dependencies even when there is only a single layer.
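
The pipeline described above (character embedding, convolution, pooling, then a recurrent layer) can be sketched in plain Python as follows. This is only an illustration with random, untrained weights: the alphabet, all sizes, and the plain tanh recurrent cell are assumptions chosen for brevity, and these notes do not specify the paper's actual cell type or hyperparameters.

```python
import math
import random

random.seed(0)

def rand_matrix(rows, cols, scale=0.1):
    return [[random.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def conv1d_relu(seq, filters, width):
    """Valid 1D convolution over time, followed by ReLU.

    `seq` is a list of feature vectors; each filter is a flat weight
    vector over `width` concatenated frames.
    """
    out = []
    for t in range(len(seq) - width + 1):
        window = [x for frame in seq[t:t + width] for x in frame]
        out.append([max(0.0, a) for a in matvec(filters, window)])
    return out

def max_pool(seq, size):
    """Non-overlapping max pooling over time."""
    return [
        [max(col) for col in zip(*seq[t:t + size])]
        for t in range(0, len(seq) - size + 1, size)
    ]

def rnn_last_state(seq, w_in, w_rec):
    """Run a simple tanh recurrent cell and return the final hidden state."""
    h = [0.0] * len(w_rec)
    for x in seq:
        h = [math.tanh(p + q) for p, q in zip(matvec(w_in, x), matvec(w_rec, h))]
    return h

# Hypothetical sizes, chosen only to keep the example small.
ALPHABET = "abcdefghijklmnopqrstuvwxyz "
EMBED, FILTERS, WIDTH, POOL, HIDDEN = 4, 6, 3, 2, 5

embedding = rand_matrix(len(ALPHABET), EMBED)
conv_w = rand_matrix(FILTERS, WIDTH * EMBED)
w_in = rand_matrix(HIDDEN, FILTERS)
w_rec = rand_matrix(HIDDEN, HIDDEN)

def encode(text):
    """Characters -> embeddings -> conv + pool -> recurrent summary vector."""
    chars = [embedding[ALPHABET.index(c)] for c in text.lower() if c in ALPHABET]
    feats = max_pool(conv1d_relu(chars, conv_w, WIDTH), POOL)
    return feats, rnn_last_state(feats, w_in, w_rec)

feats, doc_vector = encode("convolution then recurrence")
print(len(feats), len(doc_vector))
```

The convolution and pooling shorten the character sequence before the recurrent layer sees it, so the recurrence runs over fewer steps than there are characters; a classifier would then be applied to `doc_vector`.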

Results

- They compare their model with Zhang et al. (2015) and achieve slightly better results.